Many of those who reach old age don’t enjoy the condition much. Those who tend to them, their caregivers, often wish they could do something else with their time.

A few years ago, my wife (mostly) and I served as caregivers for my mother-in-law. As a scifi writer, I wondered if technology might help ease the burden for other caregivers someday.

I wrote a short story, “Its Tender Metal Hand,” about a caregiver robot of the near future. That story appears in the new anthology by Cloaked Press, Spring into Scifi, now available.

The Need

With human lifespans lengthening and the large Baby Boom generation reaching old age, the need for caregivers grows daily. Worsening the problem, the current labor shortage reduces the supply of potential workers in the field. The recent deaths of actor Gene Hackman and his caregiver wife, Betsy Arakawa, showcased the importance of the caregiver role.

The Tasks

A caregiver becomes a jack-of-all-trades, though few tasks rate high in difficulty—for humans. A good caregiver should:

- Remind the patient about medication, and provide it;

- Guide the patient around the home and yard;

- Provide companionship via conversation;

- Play games;

- Perform necessary housework;

- Clean and bathe the patient;

- Monitor symptoms; and

- Administer first aid if necessary.

The ideal, more advanced, caregiver might also:

- Lift, reposition, and physically move the patient;

- Perform medical tasks such as taking vital readings and drawing blood;

- Conduct physical therapy; and

- Conduct psychological therapy.

The Current State

No single robot exists today that performs all those tasks. Some robots perform one or a few of the functions, but a true, general-purpose caregiver robot awaits future development.

Today’s caregiver robots include: Aibo by Sony, ASIMO by Honda, Baxter by Rethink Robotics, Care-O-Bot 4 by Fraunhofer IPA and Mojin Robotics, Dinsow Mini 2 by CT Robotics, ElliQ by Intuition Robotics, Grace by Hanson Robotics, Human Support Robot (HSR) by Toyota, Mabu by Catalia Health, Mirokaï by Enchanted Tools, Moxi by Diligent Robotics, Nadine by NTU Singapore, NAO by Aldebaran Robotics, Paro by Japan’s National Institute of Advanced Industrial Science and Technology, Pepper by Aldebaran Robotics, Pria by Pillo Health and Stanley Black & Decker, Ruyi by NaviGait, and Stevie by Akara Robotics.

The Difficulties

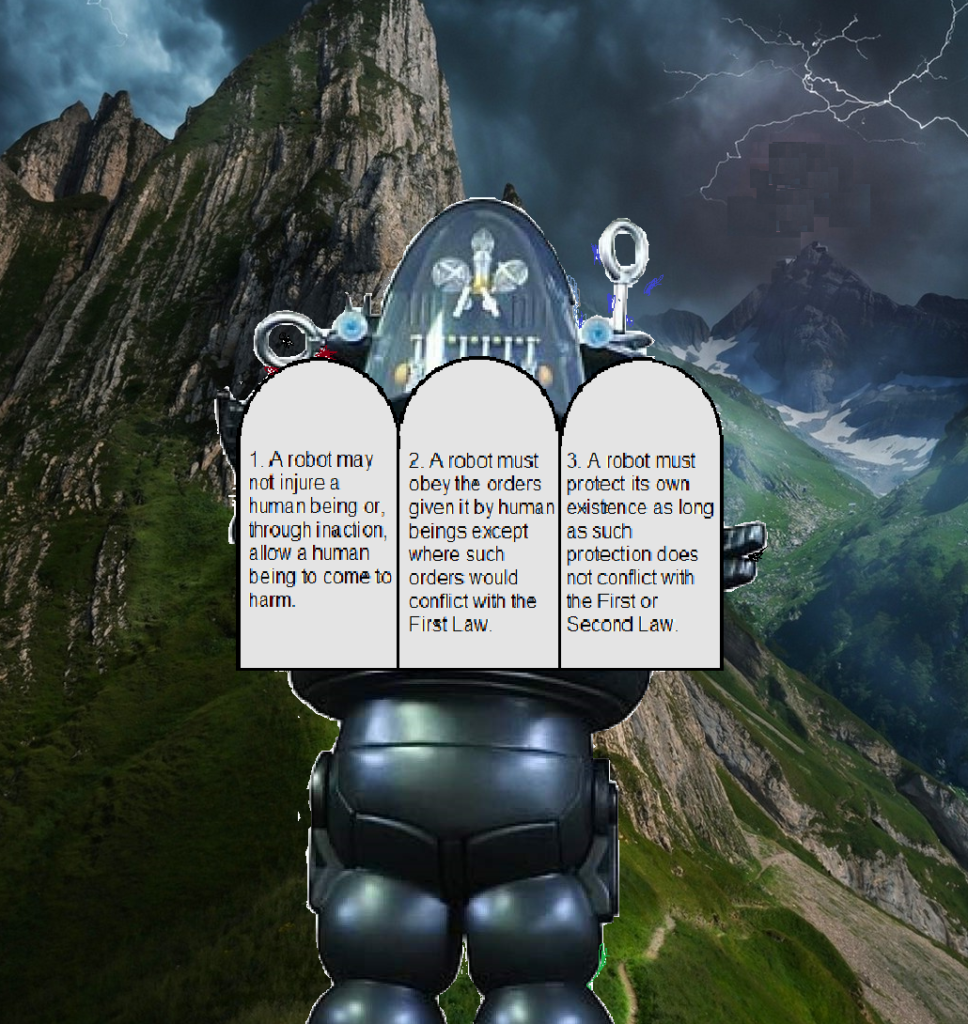

Robots have advanced in capability but still struggle with tasks humans find easy, even as they excel at some things people find difficult.

Two examples of the latter category occur to me. As mentioned in my previous blogpost, a robot will listen with patience to repeated re-tellings of the same story, and a sturdy robot could lift a heavy patient without spinal strain.

Also, certain tasks, even if robotically possible, present serious consequences if done wrong. For safety reasons, substantial testing must occur before permitting robots to perform medical tasks or to lift patients.

Perhaps the most elusive task for a caregiver robot, the last one to be achieved, will be to exhibit a truly human connection, a deep, sympathetic friendship bond.

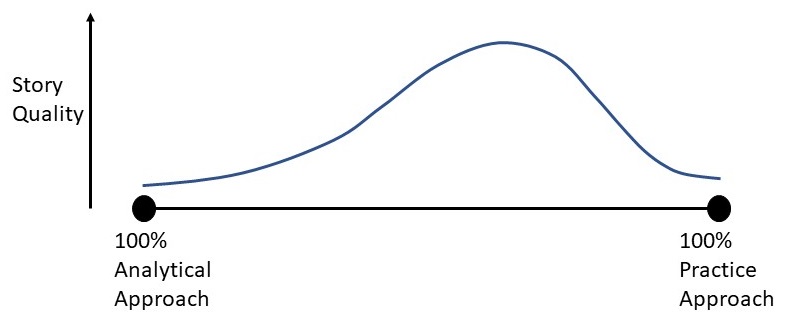

Fictional Treatment

Movies have explored the concept of caregiver robots in various ways. Bicentennial Man and I, Robot touch on the idea. Big Hero 6 and Robot and Frank delve deeper, with caregiver robots integral to their plots.

I’m unfamiliar with two other caregiver robot movies: Android Kunjappan Version 5.25 and its remake, Koogle Kuttappa.

My story, “Its Tender Metal Hand,” features a general-purpose caregiver robot capable of most of the tasks mentioned above. However, it lacks an emotional bond, an understanding of the human condition.

But maybe it can learn.

Perhaps an advanced, capable caregiver robot lies in the future for—

Poseidon’s Scribe