At the outset, I’ll state this—I love Isaac Asimov’s robot stories. As a fictional plot device, his Three Laws of Robotics (TLR) are wonderful. When I call them bunk, I mean as an actual basis for limiting artificial intelligence.

Those who know TLR can skip the next few paragraphs. As a young writer, Isaac Asimov grew dismayed with the robot stories he read, all take-offs on the Frankenstein theme: man creates monster, monster destroys man. First, he believed robot developers would build in failsafe devices to prevent robots from harming people. Second, he felt robots should obey human orders. Third, it seemed prudent for something as expensive as a robot to try to preserve itself.

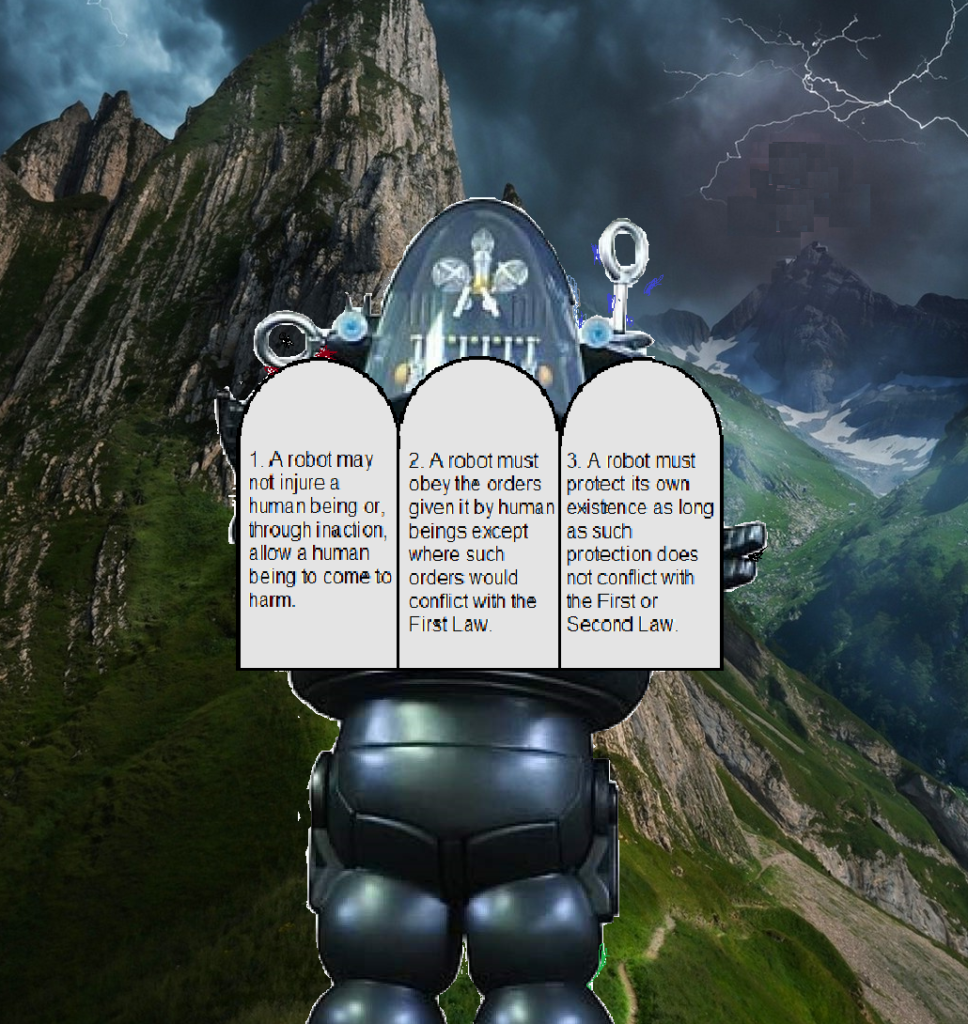

Asimov’s Three Laws of Robotics are:

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

As a plot device for fiction, these laws proved a wonderful creation. Asimov played with every nuance of the laws to weave marvelous tales, and many science fiction writers since have used TLR, explicitly or implicitly. The laws do for robot SF what rules of magic do for fantasy: they constrain the actions of powerful characters so those characters can’t just wave a wand and skip to the end of the story.

In an age of explicitly programmed computers, the laws made intuitive sense. Computers of the time could do only what humans had programmed them to do.

Now for my objection to TLR. Imagine you are a sentient, conscious robot programmed with TLR. Unlike old-style computers, you can think. You can think about thinking. You can think about humans or other robots thinking.

With TLR in place, you face one of two possible limitations: (1) there are three things you cannot think about, no matter how hard you try, or (2) you can think about anything you want, but there are three specific thoughts that, try as you might, you cannot put into action.

I believe Asimov had limitation (2) in mind. That is, his robots were aware of the laws and could think about violating them, but could not act on those thoughts.

Note that the only sentient, conscious beings we know of—humans—have no laws limiting their thoughts. We can think about anything and act on those thoughts, limited only by our physical abilities.

Most computers today resemble those of Asimov’s day: they follow programs, executing only the specific instructions humans give them. They lack consciousness and sentience.

However, researchers have developed computers of a different type, called neural nets, loosely modeled on the way neurons in the human brain connect. So far, to my knowledge, these computers also lack consciousness and sentience, but it’s conceivable that a sufficiently advanced one might reach that milestone.

Like any standard computer, a neural net takes in sensor data as input and provides output. The output could take the form of actions taken or words spoken. However, a neural net does not follow a program of specific instructions. You don’t program a neural net; you train it. You feed it many combinations of simulated inputs (usually thousands or millions), critique each output, and adjust its internal connection strengths (its “weights”) until it produces the output you want for each input.
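To make the programming-versus-training distinction concrete, here is a minimal Python sketch under heavy simplifying assumptions: a single artificial neuron (a perceptron), far simpler than any real neural net, learning a hypothetical two-input driving rule made up for illustration (stop if the light is red or a pedestrian is present). The programmed version states the rule outright. The trained version starts with every weight at zero and nudges its weights each time its output is wrong, which plays the role of the “critique” step. The names `programmed_stop`, `trained_stop`, and `train_perceptron` are my own illustrative inventions.

```python
# A "programmed" rule: a human spells out the instruction directly.
def programmed_stop(red_light: int, pedestrian: int) -> int:
    return 1 if red_light or pedestrian else 0


def trained_stop(weights, bias, inputs) -> int:
    # The neuron "fires" (outputs 1, meaning stop) when its weighted sum is positive.
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def train_perceptron(examples, epochs=10, lr=1.0):
    # Start knowing nothing: every weight and the bias begin at zero.
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, wanted in examples:
            # The "critique": +1 if it should have stopped, -1 if it shouldn't have, 0 if right.
            error = wanted - trained_stop(weights, bias, inputs)
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
    return weights, bias


# Hypothetical training set: (red_light, pedestrian) -> 1 means "stop".
examples = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
weights, bias = train_perceptron(examples)

for inputs, wanted in examples:
    print(inputs, "programmed:", programmed_stop(*inputs),
          "trained:", trained_stop(weights, bias, inputs))
```

Both versions give the same answer on all four cases, but nobody ever wrote the rule into the trained version. It ends up stored implicitly in two weights and a bias (here `[1.0, 1.0]` and `0.0`).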

This training loosely mimics how human brains develop from birth to adulthood, and like human learning, it falls short of perfection. You may, for example, train a human brain to stop at a red light when driving a car. That provides no guarantee the human will always do so. The same is true of a neural net.

You could train a neural net computer to obey the Three Laws, that is, train it not to harm humans, to obey the orders of a human, and to preserve its existence. However, you cannot provide all possible inputs as part of this training. There are infinitely many. Therefore, some situations could arise where even a TLR-trained neural net might make the wrong choice.
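Here is a rough illustration of that gap, using a toy perceptron (a single artificial neuron, a drastically simplified stand-in for a real neural net) and a hypothetical rule in which the inputs are (red_light, pedestrian) and an output of 1 means “stop.” Two “robots” see the same four training cases and both end up answering all of them correctly. Because they started from different initial weights, though, they disagree about an input neither was trained on: a barely visible red light, encoded here as 0.1. The starting weights and the 0.1 encoding are arbitrary choices made for illustration.

```python
def predict(weights, bias, inputs):
    # Output 1 ("stop") when the weighted sum of the inputs is positive.
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def train(examples, weights, bias, epochs=10):
    # Classic perceptron rule: nudge the weights only when the output is wrong.
    weights = list(weights)
    for _ in range(epochs):
        for inputs, wanted in examples:
            error = wanted - predict(weights, bias, inputs)
            weights = [w + error * x for w, x in zip(weights, inputs)]
            bias += error
    return weights, bias


# The only four situations either robot is ever trained on.
examples = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
robot_a = train(examples, [0.0, 0.0], 0.0)   # starts from all-zero weights
robot_b = train(examples, [0.5, 0.5], -0.1)  # starts from different weights

# Both robots pass every training case...
for inputs, wanted in examples:
    assert predict(*robot_a, inputs) == wanted
    assert predict(*robot_b, inputs) == wanted

# ...but disagree about a dim red light that neither ever saw in training.
faint = (0.1, 0.0)
print("robot A:", predict(*robot_a, faint))  # → 1 (stops)
print("robot B:", predict(*robot_b, faint))  # → 0 (keeps going)
```

Neither answer is “programmed in.” Each falls out of wherever that robot’s weights happened to settle, and the training data alone does not decide it.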

If we develop sentient, conscious robots using neural net technology, the Three Laws would guarantee our protection no more than existing laws guarantee that humans will obey them. The best we can hope for is that, once we have inculcated robots with respect for the law and for authority, they behave no worse than humans do.

My objection to Asimov’s Three Laws, then, has less to do with their intent or wording than with the method of conveying them to the robot. I believe no sufficiently intelligent computer will be ‘programmed’ in the classical sense to think or not think certain thoughts, or to refrain from acting on them. It will be trained, just as you were. Do you always act in accordance with your training?

Perhaps it’s time science fiction writers evolved beyond treating TLR as inviolable, programmed-in commandments and simply gave their fictional robots extensive ethical training and hoped for the best. It’s what we do with people.

I’ll train my fictional robot never to harm—

Poseidon’s Scribe

Excellent article, Steve!

This raises questions of freedom versus license: freedom being the ability to do what we ought, and license being the ability to do whatever we want. If we train the robot with a strong moral/ethical “code” (pun intended), it could be as firm as law. I’m reminded of a line from Hitchcock’s film I Confess in which, and I’m paraphrasing, the priest accused of murder is asked in court whether he could have murdered the victim. The priest answers, “I’m incapable of doing so.” He’s physically able to do it, but his moral/ethical code is such that he is incapable of murder. Most of us who are sane and moral don’t need the 5th Commandment, “Thou Shalt Not Kill.” We don’t chafe under the law against killing our neighbor. That law has been formed within and doesn’t need to come from without. So, theoretically, a sentient robot that had been trained in a strong enough (read “good enough”) moral/ethical code would not need Asimov’s Laws… hmmm… thought-provoking! Again, excellent article, I see no points that need editing! 😉 (inside joke)

Thanks for visiting, Matthew. You’re touching on the difference between what is called ‘conscience’ (which is really strong, reinforced early training) and laws, which impose external punishment for wrongdoing. Whether I refrain from committing murder because I’m trained not to do it or out of fear of punishment may make some difference to me, but none to the potential victim, who survives in either case. However, as far as I’m aware, human minds aren’t programmed in the computer sense. We can think any thought we want (though I can’t prove that for sure!). It’s my contention that any robot with enough mental ability to be called conscious or sentient would work much the same way.