Though I usually blog about writing, today I’ll depart from that to focus on a fun historical story for the holiday season.

Among my late father’s belongings, I found a scrapbook of family history compiled by my aunt. It included an article copied from “A Lake Country Christmas,” Volume 2, 1983, pages 3 and 4. Written by Cindy Lindstedt, the article bore the title “Christmas Memories: The Southard’s of Delafield.”

It concerns a Wisconsin farming family living in a house with a prominent mulberry tree. The article singles out a man named John Southard (called ‘Papa’) and his children Margaret, Grace, and Bob, during a particular Christmas in 1938 or ’39. Here’s a paragraph from the article:

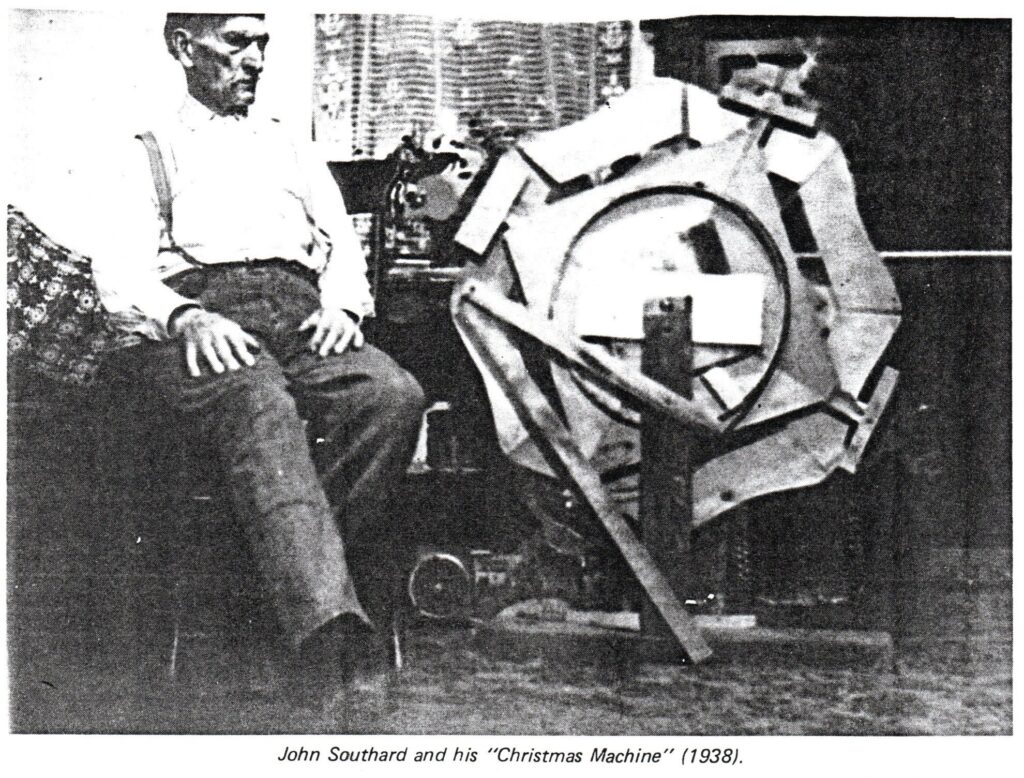

“The strangest memory is the day in 1939 when Papa, then in his 70’s and dependent upon a wheelchair to get around, engineered his special Christmas project. With a bushel basket hoop as a foundation, he called for various sizes of wood. The mystified but obedient children complied with his requests, and Papa pounded and puttered. Margaret was asked to cut and paint three plywood reindeer and a Santa and a sleigh. Soon the finished product was unveiled: a Delco-powered, motorized Santa who sent his reindeer “leaping over the mountain” in front of his sleigh, as the large, circular contraption rotated vertically. Although Grace, Margaret and Bob were in their twenties, squeals of delight rustled the branches of the Mulberry tree that day the wonderful machine first rumbled into motion (and many Christmas seasons hence, whenever the invention has been viewed by later generations).”

Children of today might spend fifteen seconds watching such a machine spin before asking whether it makes sounds or lights up, then lose interest. During the Great Depression, though, before mass-marketed toys and when electrical machinery was rare and expensive, even a crude rotating wheel could entertain a whole family.

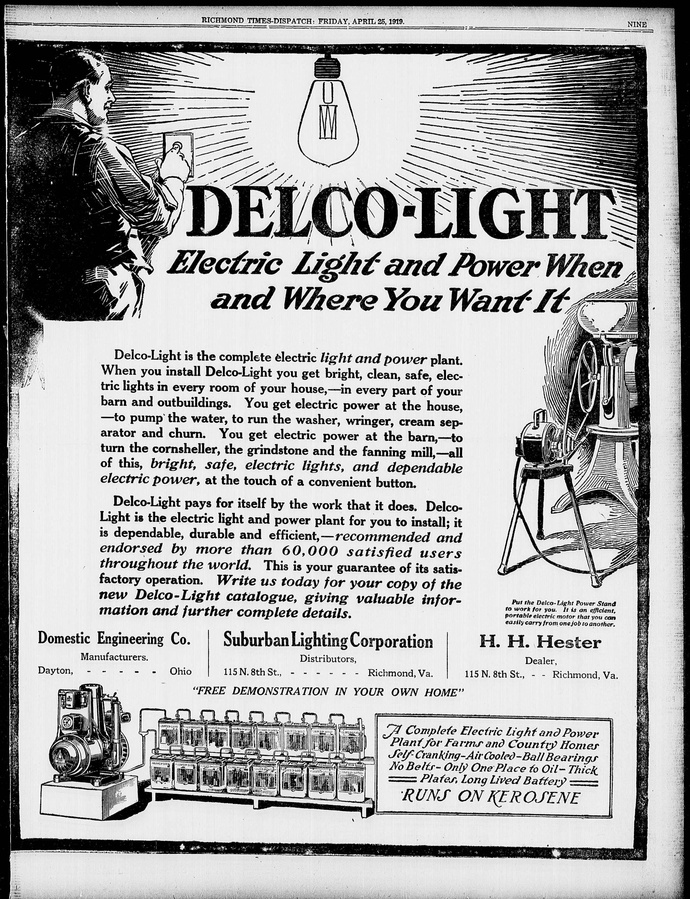

One phrase in the article stood out to me: Delco-powered. Today, we know ACDelco as a General Motors-owned brand of automotive parts, including spark plugs and batteries. At first, I assumed Papa Southard’s Christmas Machine drew its power from a car battery.

However, the term ‘Delco-powered’ probably meant something different in the late 1930s, something that would have been remembered in those rural communities in the 1980s when the article appeared. In the decades before electric lines stretched to every remote house, Delco sold a product called “Delco-Light,” a miniature power plant for a farm. A kerosene-fueled generator charged a bank of batteries to run electric equipment inside the home. I believe the article referred to a device like that.

I’m a little unsure of my relationship to Papa. I had a great-grandfather named John Southard, who lived in that area and would have been about the right age at that time. However, John is a common first name and many Southards lived in that region of Wisconsin. The article mentions only one son of Papa, Bob, and my grandfather wasn’t named Bob. Moreover, my own father would have been seven or eight years old when Papa built the Christmas Machine, and my dad never mentioned it, though he wrote a lot about his childhood.

Still, it’s interesting to think about a time when a wheelchair-bound tinkerer in his eighth decade would cobble together a mechanical/electrical wheel to entertain his family at Christmas time. Can’t you just hear that motor hum and the wood creak, and see the three reindeer leading the way, pulling Santa’s sleigh up, down, and around?

Leaving you to imagine that, I’ll wish you a Merry Christmas from—

Poseidon’s Scribe